The Importance of Copyediting and Proofreading

February 27, 2024

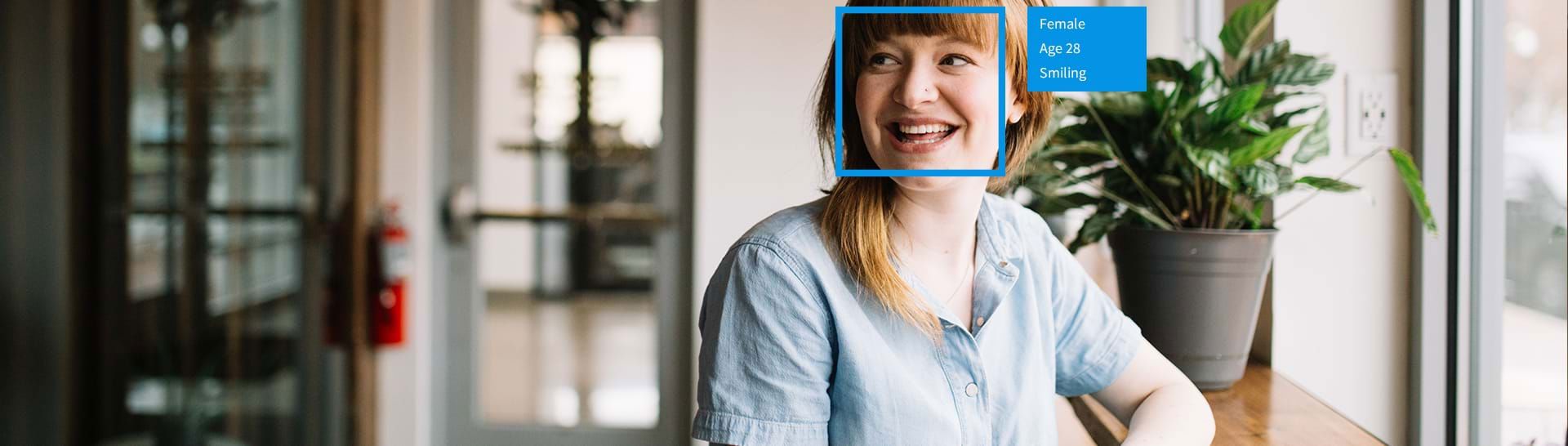

Building a Basic Facial Recognition Application Using the Microsoft Cognitive Services Face API

Introduction

In April 2015, Microsoft announced Project Oxford, a suite of APIs made available to developers. In March 2016, Project Oxford was rebranded as Microsoft Cognitive Services. Finally, in April 2017, the Face API, along with several other APIs, became generally available (GA) in Cognitive Services.

Microsoft Cognitive Services is a suite of 25+ APIs that can be used to provide a wide variety of advanced functionality. This article will provide a short how-to for building a basic facial recognition application using the Face API.

Disclaimer

The purpose of this article is to provide a quick demonstration of the Face API. It’s not intended to explore all the functionality of the Face API or Cognitive Services. Additionally, the code samples, while functional, are provided for reference and are not production-ready.

Overview

Before we get to the code, let’s understand what’s necessary for this basic facial recognition application to function.

To perform facial recognition, you need something to compare the image against. In the Face API, this is a collection of people called a ‘PersonGroup’. Each PersonGroup may contain up to 1,000 or 10,000 people, depending on your Face API subscription level. Each person in the PersonGroup is represented by a ‘Person’ entity (each with its own PersonId), and each Person can have up to 248 Faces (reference images associated with that Person entity).

Once all the Persons and Faces have been added to a PersonGroup, one step remains: you need to call an additional API to ‘Train’ the group. When training is complete, the group is ready to use. All of this preparation must happen before the facial recognition application can run. The first code sample (below) demonstrates the process of building out a PersonGroup.

Once we have our people and faces stored in Azure, we can move to the facial recognition part of this process. These are the steps for performing facial recognition:

- The application submits an image to the Face API using the Detect() method. The image is analyzed, and any faces detected are stored in Azure for 24 hours, each under a unique FaceId (a GUID) that is returned to the calling application. The Detect() method will detect up to 64 faces.

- With a list of FaceIds, we can now use the Identify() method to compare the faces in our image to those in our PersonGroup. (Note: While the Detect() method will return up to 64 faces, the Identify() method will only accept up to 10 FaceIds for facial matching.)

- And finally, if there’s a match, we can request the Person data for the appropriate Person entity.
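
Because Detect() can return more faces than Identify() accepts in a single call, an application that handles crowded images has to split its FaceIds into batches. The following is a minimal sketch of that step, assuming the limits quoted above. The `FaceIdBatcher` class and its `Batch` method are hypothetical helper names and are not part of the Face API package.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper (not part of the Face API client library).
public static class FaceIdBatcher
{
    // Maximum number of FaceIds per Identify() call, per the limit quoted above.
    public const int MaxFaceIdsPerIdentify = 10;

    // Splits the FaceIds into consecutive batches of at most batchSize items,
    // preserving their original order.
    public static List<Guid[]> Batch(IEnumerable<Guid> faceIds, int batchSize = MaxFaceIdsPerIdentify)
    {
        if (faceIds == null) throw new ArgumentNullException(nameof(faceIds));
        if (batchSize <= 0) throw new ArgumentOutOfRangeException(nameof(batchSize));

        var all = faceIds.ToList();
        var batches = new List<Guid[]>();
        for (int i = 0; i < all.Count; i += batchSize)
        {
            batches.Add(all.Skip(i).Take(batchSize).ToArray());
        }
        return batches;
    }
}
```

Each batch can then be passed to Identify() in turn. An image with the maximum of 64 detected faces would produce seven batches: six of 10 FaceIds and one of 4.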

Building the Applications

There are three steps to building a facial recognition application:

- Create a subscription to the Face API in your Azure account

- Populate a PersonGroup with Names (Person) and Images (Faces)

- Build the facial recognition application

Step 1 – Log in to your Microsoft Azure account and add a Face API subscription.

In the Azure portal, add a subscription to the Face API.
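
The subscription provides the API key that the code samples in this article hard-code as `apikey`. One possible alternative, sketched here under assumptions, is to read the key from an environment variable so it stays out of source control. The variable name `FACE_API_KEY` and the `FaceApiSettings` class are hypothetical choices for illustration.

```csharp
using System;

// Hypothetical helper; FACE_API_KEY is an assumed variable name, not an Azure convention.
public static class FaceApiSettings
{
    public const string KeyVariable = "FACE_API_KEY";

    // Returns the trimmed API key, or throws if the variable is missing or blank.
    public static string GetApiKey()
    {
        var key = Environment.GetEnvironmentVariable(KeyVariable);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                $"Set the {KeyVariable} environment variable to your Face API key.");
        }
        return key.Trim();
    }
}
```

With this in place, a client could be created with `new FaceServiceClient(FaceApiSettings.GetApiKey())` instead of a constant.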

Step 2 – Populate a PersonGroup

Strictly speaking, the Face API is a REST API, but there’s a great NuGet package (Microsoft.ProjectOxford.Face) that wraps all the interactions in an easy-to-use FaceServiceClient class.
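
For a sense of what the package wraps, here is a rough sketch of building a raw Detect request with HttpClient types. It assumes the regional v1.0 endpoint format and the `Ocp-Apim-Subscription-Key` header used by Cognitive Services. The region value and the `FaceRestSketch` helper are illustrative assumptions, so check the endpoint shown for your own subscription in the Azure portal.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper showing the shape of a raw REST call.
public static class FaceRestSketch
{
    // Builds (but does not send) a Detect request carrying raw image bytes.
    // The region (for example "westus") is an assumption; use your subscription's region.
    public static HttpRequestMessage BuildDetectRequest(string region, string apiKey, byte[] imageBytes)
    {
        var uri = new Uri(
            $"https://{region}.api.cognitive.microsoft.com/face/v1.0/detect?returnFaceId=true");

        var request = new HttpRequestMessage(HttpMethod.Post, uri);
        // The subscription key travels in this header on every call.
        request.Headers.Add("Ocp-Apim-Subscription-Key", apiKey);
        // Binary image uploads use the octet-stream content type.
        request.Content = new ByteArrayContent(imageBytes);
        request.Content.Headers.ContentType = new MediaTypeHeaderValue("application/octet-stream");
        return request;
    }
}
```

The request could then be sent with `new HttpClient().SendAsync(request)`, and the JSON response parsed by hand. The FaceServiceClient class used in the rest of this article takes care of both.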

    using Microsoft.ProjectOxford.Face;
    using Microsoft.ProjectOxford.Face.Contract;
    using System;
    using System.IO;
    using System.Linq;
    using System.Threading;

    namespace AddPerson
    {
        class Program
        {
            // PersonGroup is named "marathon"
            const string GroupName = "marathon";
            const string apikey = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx";
            static string name = "";

            // Usage: AddPerson "Name" "PathToReferenceImage"
            static void Main(string[] args)
            {
                // The first argument is the name of the person
                // we're about to store in our PersonGroup
                name = args[0];

                // Load the reference image into memory (second argument)
                var stream = new MemoryStream(File.ReadAllBytes(args[1]));
                var client = new FaceServiceClient(apikey);

                // If needed, create the group
                var groups = client.ListPersonGroupsAsync().Result;
                if (!groups.Any(g => g.Name == GroupName))
                {
                    client.CreatePersonGroupAsync(GroupName, GroupName).Wait();
                }

                // Get the current list of people in the group
                // and look for our person (case-insensitive match on name)
                var people = client.GetPersonsAsync(GroupName).Result;
                var person = people.FirstOrDefault(
                    p => string.Equals(p.Name, name, StringComparison.OrdinalIgnoreCase));

                Guid personId;
                if (person == null)
                {
                    // Add our person (and get his/her Id)
                    personId = client.CreatePersonAsync(GroupName, name).Result.PersonId;
                }
                else
                {
                    // Delete the old face if there is one
                    if (person.PersistedFaceIds.Length > 0)
                    {
                        client.DeletePersonFaceAsync(GroupName, person.PersonId, person.PersistedFaceIds[0]).Wait();
                    }
                    personId = person.PersonId;
                }

                // Add the image to the person
                client.AddPersonFaceAsync(GroupName, personId, stream).Wait();

                // Tell the service to train the group
                TrainingStatus trainingStatus;
                client.TrainPersonGroupAsync(GroupName).Wait();

                // Wait until training is complete
                while (true)
                {
                    trainingStatus = client.GetPersonGroupTrainingStatusAsync(GroupName).Result;
                    if (trainingStatus.Status != Microsoft.ProjectOxford.Face.Contract.Status.Running) break;
                    Thread.Sleep(5000);
                }

                Console.WriteLine($"Training complete: {trainingStatus.Status}");
                Console.ReadKey();
            }
        }
    }
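
The training loop in this sample polls indefinitely, which is fine for a demonstration but would hang if training never left the running state. One possible refinement, offered as a sketch rather than as part of the original sample, is a small polling helper with a timeout. The `TrainingPoller` class is a hypothetical name, and the helper is deliberately generic so it does not depend on the Face API types.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical helper (not part of the Face API client library).
public static class TrainingPoller
{
    // Calls getStatus repeatedly until isRunning returns false for the result.
    // Throws TimeoutException if the next poll would fall after the deadline.
    public static async Task<T> WaitWhileAsync<T>(
        Func<Task<T>> getStatus,
        Func<T, bool> isRunning,
        TimeSpan interval,
        TimeSpan timeout)
    {
        var deadline = DateTime.UtcNow + timeout;
        while (true)
        {
            var status = await getStatus();
            if (!isRunning(status)) return status;

            if (DateTime.UtcNow + interval > deadline)
            {
                throw new TimeoutException("Training did not finish within the allotted time.");
            }
            await Task.Delay(interval);
        }
    }
}

// Possible use with the sample's client (assumes the same package and GroupName):
//
//   var trainingStatus = TrainingPoller.WaitWhileAsync(
//       () => client.GetPersonGroupTrainingStatusAsync(GroupName),
//       s => s.Status == Microsoft.ProjectOxford.Face.Contract.Status.Running,
//       TimeSpan.FromSeconds(5),
//       TimeSpan.FromMinutes(5)).Result;
```

The five-second interval matches the sample. The five-minute timeout is an arbitrary illustration and should be tuned to the size of the PersonGroup.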

Step 3 – Facial recognition in 7 lines of code…

Using the same Microsoft.ProjectOxford.Face NuGet package, we can write the facial recognition application in just 7 lines of code.

    using Microsoft.ProjectOxford.Face;
    using System;
    using System.IO;
    using System.Linq;

    namespace Identify
    {
        class Program
        {
            //
            // Using the Azure Cognitive Services Face API or
            // How to write a facial recognition application in 7 lines of code.
            //
            const string GroupName = "marathon";
            const string apikey = "xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx";

            // Usage: Identify "PathToImage"
            static void Main(string[] args)
            {
                try
                {
                    using (var stream = File.OpenRead(args[0]))
                    {
                        var client = new FaceServiceClient(apikey);

                        // Detect the faces, then identify at most 10 of them (the Identify limit)
                        var faces = client.DetectAsync(stream).Result;
                        var identities = client.IdentifyAsync(GroupName, faces.Select(face => face.FaceId).Take(10).ToArray()).Result;

                        identities.ToList().ForEach(i =>
                        {
                            // A face with no match has an empty candidate list
                            var person = i.Candidates.Any() ? client.GetPersonAsync(GroupName, i.Candidates[0].PersonId).Result : null;
                            Console.WriteLine(person?.Name ?? "Unknown");
                        });
                    }
                }
                catch (Exception ex)
                {
                    // Reached when no faces are detected or when a service call fails
                    Console.WriteLine($"No faces found or the request failed: {ex.GetBaseException().Message}");
                }

                Console.ReadKey();
            }
        }
    }

Summary

This article has presented a basic overview of the Face API and shown how quickly you can develop a basic facial recognition application. The Face API is just one of the 25+ APIs available in Cognitive Services, which also include Speaker Recognition (voice identification), Computer Vision, Bing Speech (speech to text and text to speech), Translator Speech, and many others. Using Microsoft Cognitive Services, developers can focus on creating advanced, cloud-connected applications with functionality that was previously unavailable to them.

Happy coding.